Will the Consumer AI Vertical Dominate the Next Decade?

Consumer AI might be the biggest technological wave of this decade, as everyday usage is soaring

We’re Past the Hype Curve. Now What?

You may not have noticed, but consumer AI has already moved into your everyday life.

You might notice it in the kinds of tasks people offload without thinking, the way a student gets help structuring an assignment or a project manager leans on an assistant to untangle a messy brief.

In fact, more than half of adults in AI-mature markets now use general-purpose AI assistants weekly, and a meaningful share use them daily. Usage is real, though it doesn’t look like earlier tech waves.

PS: A concrete example of what “lowering the cost of building” looks like in practice.

Two non-coders used Lovable to ship an AI automation product that’s now doing $10k in monthly recurring revenue.

No engineers. No custom code. Just an idea turned into a working product.

That’s the pattern I’m seeing more often. People who used to be blocked at “building” are now shipping and monetizing.

Three forces stand out right now. One is the struggle of AI monetization. Millions depend on AI for small but important jobs, yet only a small share pay for any consumer AI app.

Another force is the pull of a single familiar assistant. People reach for the tool already in their routine, and everything else has to work harder to earn space.

A third pattern shows up in the workplace, where tools people buy for themselves drift into team workflows and eventually shape company spend, especially in areas tied to AI productivity.

Many industry observers expect consumer AI to grow sharply in 2026. This article lays out where the opportunity sits, what must be built, how to differentiate, and where durable value emerges for individuals.

Table of Contents

1. The Consumer AI Moment: Adoption Is Real, Monetization Isn’t (Yet)

2. How the Consumer AI Stack Is Actually Structured

3. What Real People Actually Do With AI (And What They Don’t)

4. The Default Tool Dynamic: Why General AI Assistants Own the Top of the Funnel

5. The Great Expansion: New Revenue Mechanics in Consumer AI

6. Consumer → Prosumer → Enterprise — The New GTM Ladder

7. Vibe Coding as a New Creation Primitive

8. Geography and Global Dynamics: What’s Different About This Wave

9. Where the Next Generation of Consumer AI Will Emerge

10. What This Means for Founders and Investors: A Practical Playbook

11. What the Next Decade Looks Like

1. The Consumer AI Moment: Adoption Is Real, Monetization Isn’t (Yet)

People use AI assistants the way they once used search engines: as a habitual first step whenever they need to make progress. AI adoption in mature markets is surging, and daily usage continues to climb across age groups.

The pattern is consistent everywhere. Small tasks drive most activity, and those tasks compound quickly.

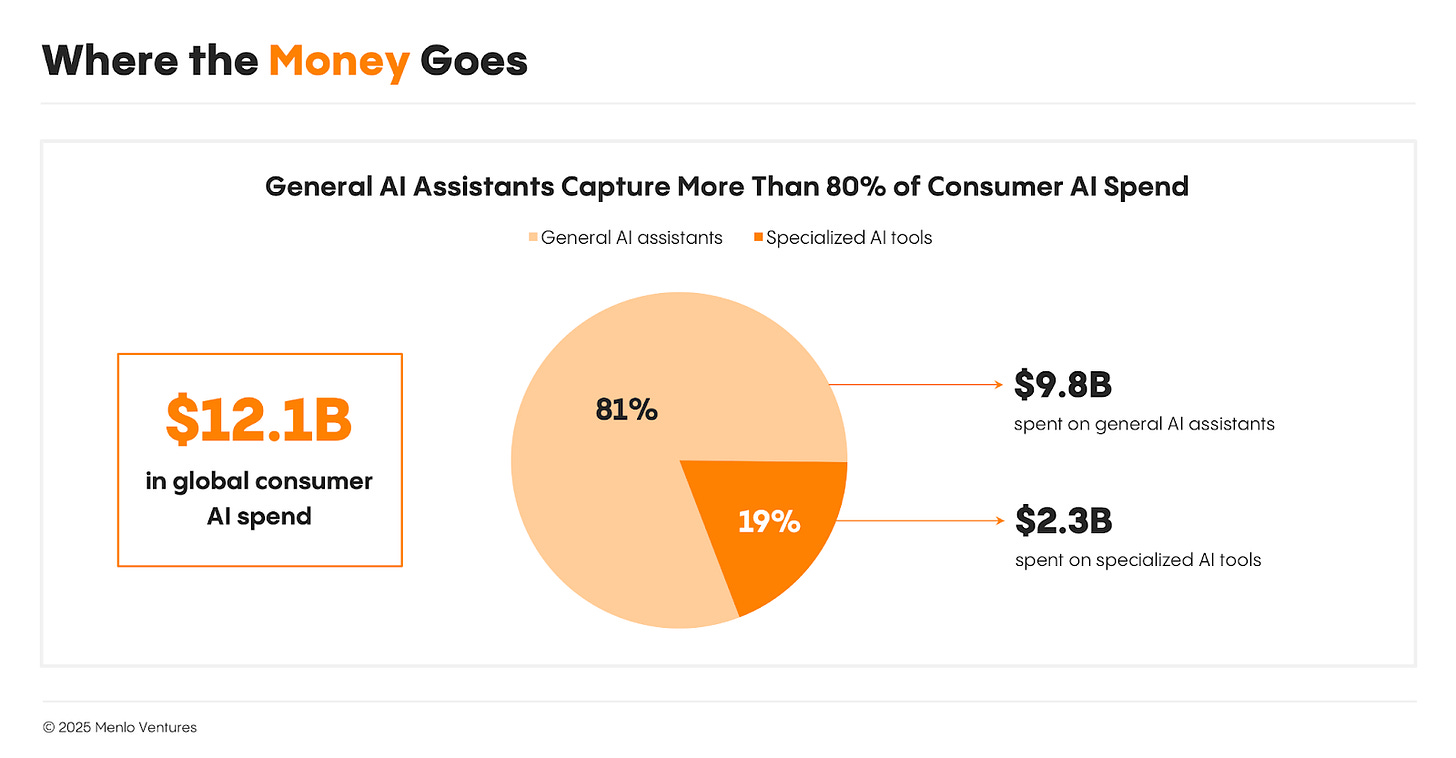

What stands out is how thin the revenue layer remains compared with the usage layer. You see this in the economics of AI consumer apps, where charts are crowded but the paying segment stays narrow.

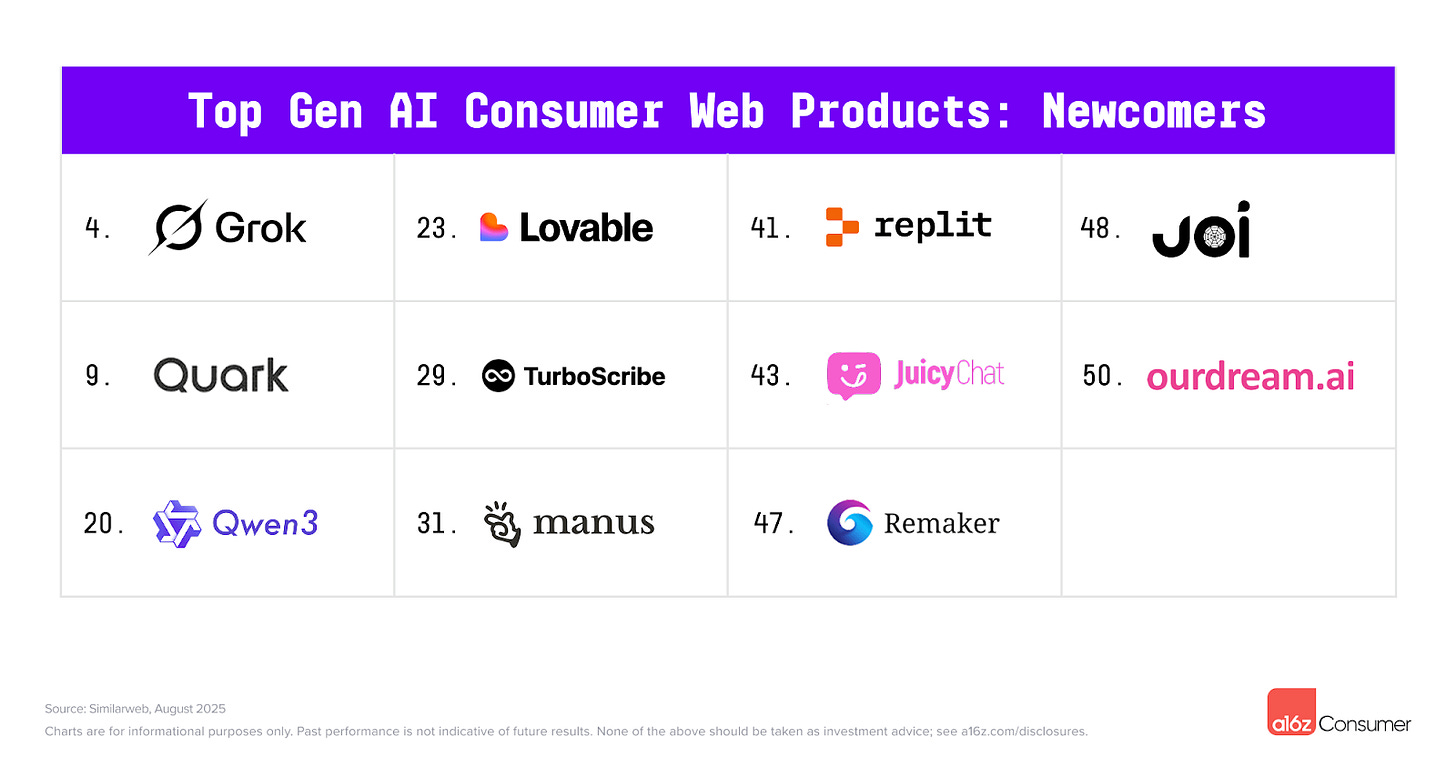

a16z’s spending data shows that top consumer AI apps like Grok, Lovable, and Replit generate little direct revenue despite meaningful engagement. Dependence is rising faster than willingness to pay, which makes more sense once you observe real behavior.

Most sessions deliver quick wins instead of deep workflows. A tightened paragraph, a clarified note, a fast comparison of options, a study outline, a plan for the week. These moments are valuable, but they don’t always translate into subscription intent.

The tool becomes woven into someone’s day long before they consider paying for it. This tells us that the business model is still adjusting.

But this is not about weak demand. The landscape just hasn’t yet aligned with how consumers behave. Tools feel generic, memory is shallow, and pricing tiers rarely match intensity of use. Most products treat every user the same even though a minority drives the bulk of volume.

We use two predictive mental models for this:

Cognitive ROI captures how much effort a tool removes.

Intent Fulfillment Rate (IFR) measures how often the assistant actually resolves the task without extra work.

Tools with high IFR earn trust faster and justify spend sooner. We’re early in that curve.
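To make Intent Fulfillment Rate a bit more concrete, here is a minimal sketch of how a team might compute it from session logs. The `Session` record and its two fields are hypothetical, and counting a session as fulfilled only when the task was resolved with no rework is one possible reading of “without extra work,” not an established definition.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One assistant session (hypothetical log record)."""
    task_resolved: bool   # did the user end up with a usable result?
    needed_rework: bool   # did they have to redo or heavily edit it?


def intent_fulfillment_rate(sessions: list[Session]) -> float:
    """Share of sessions resolved without extra work; 0.0 for an empty log."""
    if not sessions:
        return 0.0
    fulfilled = sum(
        1 for s in sessions if s.task_resolved and not s.needed_rework
    )
    return fulfilled / len(sessions)


# Illustrative data only: 2 of 4 sessions were resolved with no rework.
sessions = [
    Session(task_resolved=True, needed_rework=False),
    Session(task_resolved=True, needed_rework=True),
    Session(task_resolved=False, needed_rework=False),
    Session(task_resolved=True, needed_rework=False),
]
print(intent_fulfillment_rate(sessions))  # 0.5
```

In practice the hard part is deciding what counts as “resolved” and “rework.” Tracked over time, even a rough version of this ratio would show whether a tool is climbing the trust curve described above.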

So the million-dollar question (literally) is who will design products and pricing that capture the value inside all those micro-moments of progress, moments that will eventually define the future of AI productivity.

2. How the Consumer AI Stack Is Actually Structured

Most conversations about consumer AI flatten the landscape into “apps on top of models.” But that framing hides what users actually experience. From their perspective, the stack splits into two layers shaped by how people think, not how the technology works.

The Horizontal Layer: The Default Tools People Live In

Horizontal tools feel like the backbone of consumer AI because they show up across dozens of contexts. They act as a “home base” where people instinctively hand general-purpose assistants everything from low-stakes triage to complex research. This is the first stop for writing help, small decisions, simple research, and triage when someone feels stuck.

Think ChatGPT, Gemini, Claude, Grok etc.

Surrounding this core are AI-native workspaces and creative suites that transform vague intent into structured artifacts such as documents, visuals, or media, without the need to switch between fragmented apps.

Think Notion, Canva, ElevenLabs, Midjourney etc.

This layer is now expanding into “vibe coding” environments, where both developers and non-technical users bypass traditional syntax to build software and automations through pure description. By reducing the mental overhead of creation, these tools have become the default starting point for turning ideas into functional reality.

Think Lovable, Cursor, Replit etc.

The Vertical Layer: Tools Designed to Do One Job Well

The vertical layer looks different. Each product has a clear target user and a defined job. A sales outreach assistant handles one workflow. A recruiting tool handles another. Customer support copilots, compliance helpers, and operations automation platforms follow the same pattern. They don’t aim for broad coverage, but for depth.

These are tools like Ada, Crisp, Clay, Applaud and Delve.

The difference isn’t the interface but the level of specialization. Users turn to vertical tools when general-purpose assistants start feeling thin. They need deeper context, richer memory, tighter constraints, or more predictable output. Vertical tools thrive where stakes are higher and workflows repeatable.

Augmentors and AI Employees: A Useful Split

A second axis clarifies how tools fit into daily life. Some behave like augmentors, supporting the human as they work. Others behave more like “AI employees”, taking a workflow and running it end to end.

Augmentors sit mostly in the horizontal layer as they help you think, write, plan, or create. AI employees appear more often in the vertical layer, as they handle outreach, triage, or coordination without requiring step-by-step guidance.

Most of the market still leans toward augmentation. Full AI employees will grow as trust and reliability improve, but today people prefer tools that keep them in the loop. It’s a reminder that user psychology shapes product structure as much as model capability.

The above structure shows you where your product fits and explains why users switch tools.

3. What Real People Actually Do With AI (And What They Don’t)

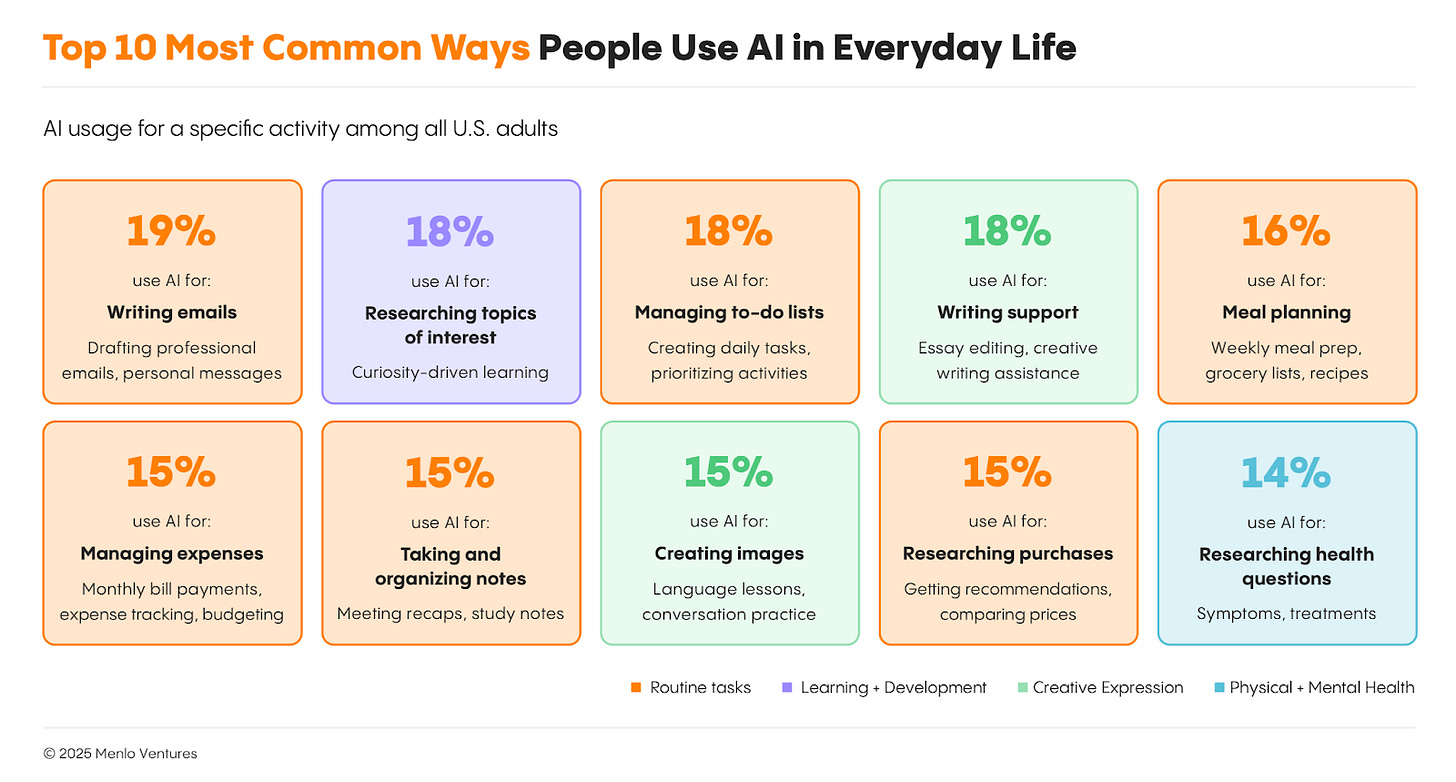

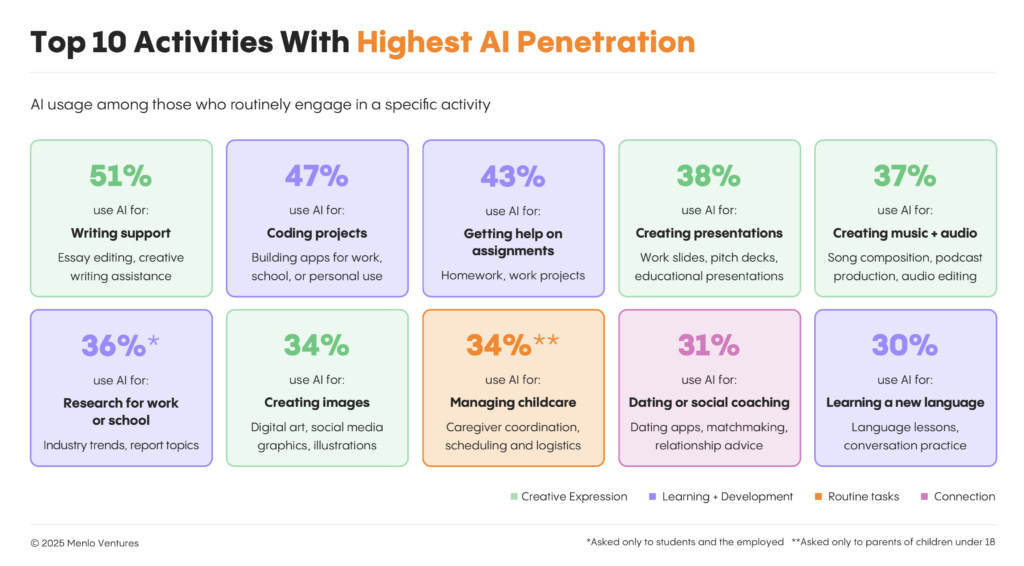

Menlo Ventures’ State of Consumer AI report reveals that usage grows out of small, ordinary decisions. People reach for an assistant when they feel stuck, tired, or short on time, not because they want to “use AI.”

The tasks are simple, the stakes are low, and the value shows up in moments where friction fades. That layer of behavior explains most of AI adoption so far, even though it’s easy to underestimate.

Usage now spans a wider range of people than early observers expected. Heavy use shows up with students, but also with working parents planning chaotic weeks, freelancers managing admin load, and mid-career professionals handling constant writing and coordination.

Many of them wouldn’t describe themselves as “AI users.” They simply found something that helped them move faster and kept returning to it.

Most users have felt that moment of relief after a good result, thinking, “That would’ve taken me three hours.” That is how a habit forms.

AI as a Cognitive Extension

Something deeper sits beneath the surface metrics. Users don’t relate to these tools the way they relate to traditional software. Over time, the assistant starts to feel like a mental add-on, helping with planning, comparisons, rewriting, and the overhead that makes a task feel heavy.

Traditional automation lifts steps out of a process, but here, the assistant sits beside you, reducing effort.

That’s where Cognitive ROI is useful. People come back to the tools that consistently reduce the energy required to reach clarity or produce something usable.

And when an assistant turns a vague intention into a working output, its Intent Fulfillment Rate rises. High IFR is one of the cleanest signals that a tool is moving from novelty to real AI productivity.

What People Avoid, For Now

Comfort doesn’t extend everywhere. When the topic turns to personal health, major financial choices, emotionally loaded conversations, or high-stakes logistics, people fall back on human judgment. Accuracy matters, but emotional risk matters more. These areas will eventually see deeper consumer AI penetration, but they require richer memory, more context, and stronger guarantees than today’s tools offer.

4. The Default Tool Dynamic: Why General AI Assistants Own the Top of the Funnel

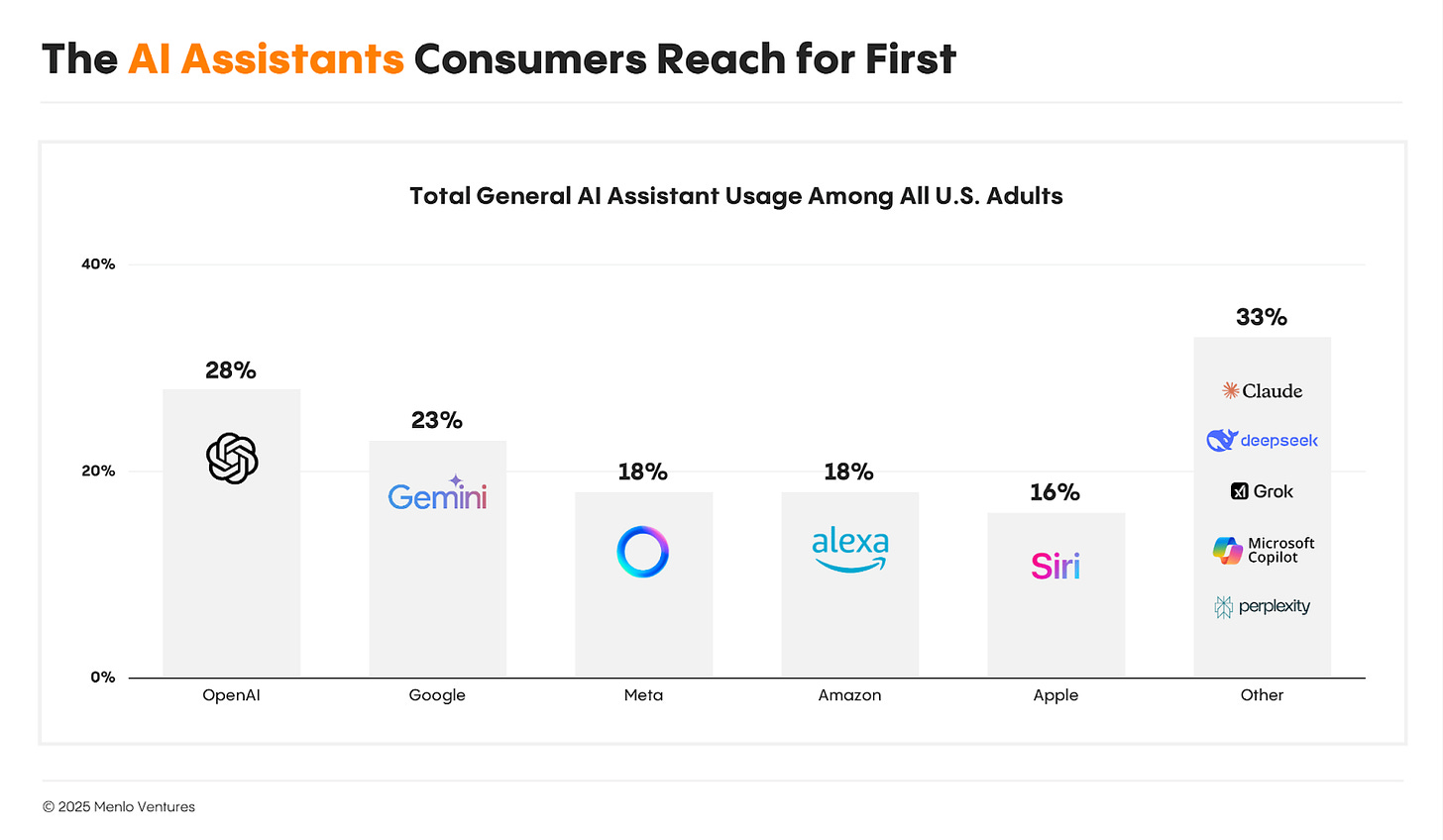

Most people don’t juggle a stable of AI consumer apps. They rely on one assistant that feels familiar and available when they need help. This default-first behavior determines which products get tried, which ones get forgotten, and which ones end up capturing most of the revenue.

The general-purpose assistant wins the first interaction because it lives in the user’s dock or browser, becoming a convenience reflex rather than a conscious choice. Over time, it becomes the anchor for every task because it feels safer and easier to fit into a daily flow.

Users only explore specialized alternatives when their default assistant fails repeatedly. Small frustrations like fuzzy explanations, hallucinations or usage limits must accumulate before someone reaches the “good enough until it isn’t” threshold.

This is why being slightly better than a general tool is not enough for a new product to succeed. Specialized apps must be significantly better in a way that feels meaningful to daily life, or the pull of the default will remain too strong to break.

Over time, the assistant becomes the gateway to everything else. As assistants gain memory, context, and embedded integrations, they start routing tasks to other tools. Distribution begins to move away from app stores and search toward a world where assistants control the surface area.

This trend already appears in usage and spending data. a16z’s AI Application Spending Report shows that horizontal applications, led by general-purpose AI assistants from companies like Google, OpenAI, and Anthropic, account for the majority of AI application spend among startups, a sign that the tools closest to user intent are capturing most of the early revenue.

When an assistant can call tools inside or outside its environment, it becomes a distribution layer on its own. For builders in the AI space, that means the assistant may soon matter more than the channel.

The products closest to user intent capture the most dollars. People trust the tool they start with, and they’re far more likely to subscribe to something that already fits into their routine. Specialized apps must convince users to adopt a new habit as well as solve a problem.

This is less about product quality than about how people behave when they’re managing cognitive load. If the default gets them close to the outcome, they don’t feel urgency to switch. That inertia is powerful, and it raises the bar for new entrants.

5. The Great Expansion: New Revenue Mechanics in Consumer AI

Traditional consumer software was built on the logic of engagement, where value was tied to time spent in-app. With AI, satisfaction comes from rapid resolution rather than duration, which disrupts that whole model.

A successful AI session might last only 20 seconds, making traditional metrics like “minutes per user” a poor indicator of value. When business structures fail to account for this efficiency, they face a mismatch between the value delivered and the revenue captured.

Static subscription tiers also struggle to keep up with unpredictable usage patterns. Most users experience sharp spikes in activity followed by long quiet periods, yet flat plans treat every user the same.

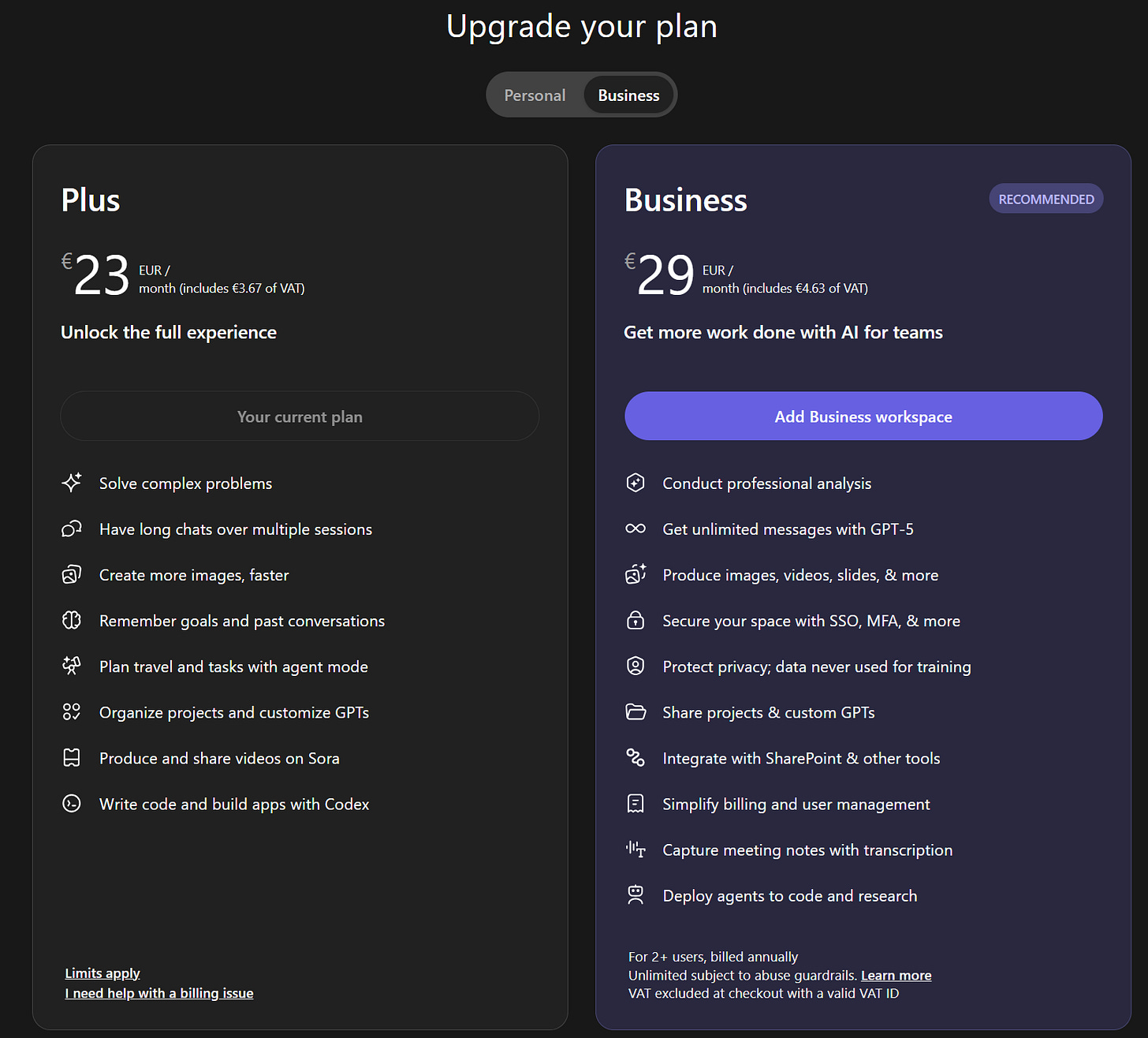

This creates a ceiling for power users and a barrier for casual ones. The industry is transitioning toward hybrid pricing. That means a base subscription for consistent access paired with usage-based extensions for higher speed or reasoning capacity.

This model allows the product to grow with the user, reducing churn by remaining light when usage is low and becoming powerful when reliance deepens.
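As a rough illustration of the hybrid pricing described above, the sketch below combines a flat base subscription with a per-unit charge once usage exceeds an included allowance. Every number here (the base fee, the allowance, the overage rate) and the function name are hypothetical placeholders, not figures from any real product. Amounts are kept in integer cents to avoid floating-point rounding.

```python
def monthly_bill_cents(
    units_used: int,
    base_fee_cents: int = 2000,       # hypothetical $20.00 base subscription
    included_units: int = 500,        # hypothetical usage included in the base
    overage_cents_per_unit: int = 2,  # hypothetical $0.02 per extra unit
) -> int:
    """Return the monthly charge in cents: base fee plus usage-based overage."""
    overage_units = max(0, units_used - included_units)
    return base_fee_cents + overage_units * overage_cents_per_unit


# A casual user stays on the base fee; a power user pays for the extra usage.
print(monthly_bill_cents(100))    # 2000 -> $20.00
print(monthly_bill_cents(2000))   # 5000 -> $50.00
```

Under these assumptions the bill stays light in quiet months and scales in heavy ones, which is the behavior that flat tiers cannot match.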

A significant multiplier in this new economy will be the spillover from personal use to professional workflows. This is a bottom-up pull motion where individuals bring proven tools into their workplaces.

When a personal assistant moves into a team environment, the lifetime value jumps as the requirement shifts toward collaboration, security, and admin controls.

Data shows that many tools once categorized as personal apps now sit inside enterprise budgets because they proved their worth at the individual level first.

Ultimately, revenue in the AI era is becoming less about broad acquisition and more about deepening the leverage found within existing users.

Success is predicted by the Intent Fulfillment Rate, which is the consistency with which a tool resolves a task without extra work.

The priority for builders is no longer just finding more users, but helping current users find more leverage. The winning products will be those that transition seamlessly from personal wedges into essential enterprise infrastructure, capturing value at every step of the climb.

6. Consumer → Prosumer → Enterprise — The New GTM Ladder

Most consumer AI products don’t begin with a sales team. They begin with a person who finds a tool that actually solves a problem better than whatever they had before. That small moment of adoption ends up defining the entire go-to-market path. Here’s what that looks like in practice.

Stage 1: Consumer — The Spark Point

The journey begins with a frictionless “spark” where a user attempts a task and receives a result that is noticeably sharper or faster than previous methods. In this layer, success depends on low cognitive load and the assistant’s ability to grasp intent rather than just literal phrasing.

Trust is not built through complex features but through the consistency of getting small things right. Once a tool proves it can reliably remove friction from daily life, it transitions from a casual experiment into a permanent habit.

Stage 2: Prosumer — Where Depth Begins

As users master the tool, a portion of them naturally graduates into more demanding workflows that require richer context and technical depth. This prosumer segment serves as the revenue anchor because these users prioritize speed, higher limits, and advanced reasoning over basic access.

Reliance grows with exposure, turning curiosity into a necessity that justifies a paid tier. This stage is also a critical laboratory for builders, as the demand for shared context and version control among power users provides the first blueprint for what a team-based product should look like.

Stage 3: Enterprise — When the Tool Becomes Infrastructure

Enterprise adoption in the AI era is driven by repetition rather than traditional sales cycles. When enough individuals within an organization independently rely on a tool to ship work faster, the product is pulled into the official company stack.

At this point, the AI tool stops being about personal convenience and becomes organizational leverage, requiring the addition of administrative controls, audit logs, and compliance layers.

The tool effectively becomes infrastructure because it has already proved its value within the actual workflow of the team.

Why This Ladder Works So Well for AI

AI accelerates the traditional SaaS journey by delivering value in minutes rather than weeks. This immediacy creates a “pressure” dynamic, where one person uses AI to raise their baseline quality or speed, and the rest of the team feels an imbalance that pushes the entire organization to adopt the same tool.

Because the tool automates the most difficult parts of a workflow, the distance between a casual user and an enterprise advocate is shorter than ever. The product is then pulled upward by users who have already integrated it into their professional identities.

If you treat this ladder as a progression rather than a plan, the strategy becomes clearer. You’re not forcing the product into a segment; you’re following the contour of how people adopt AI in the real world.

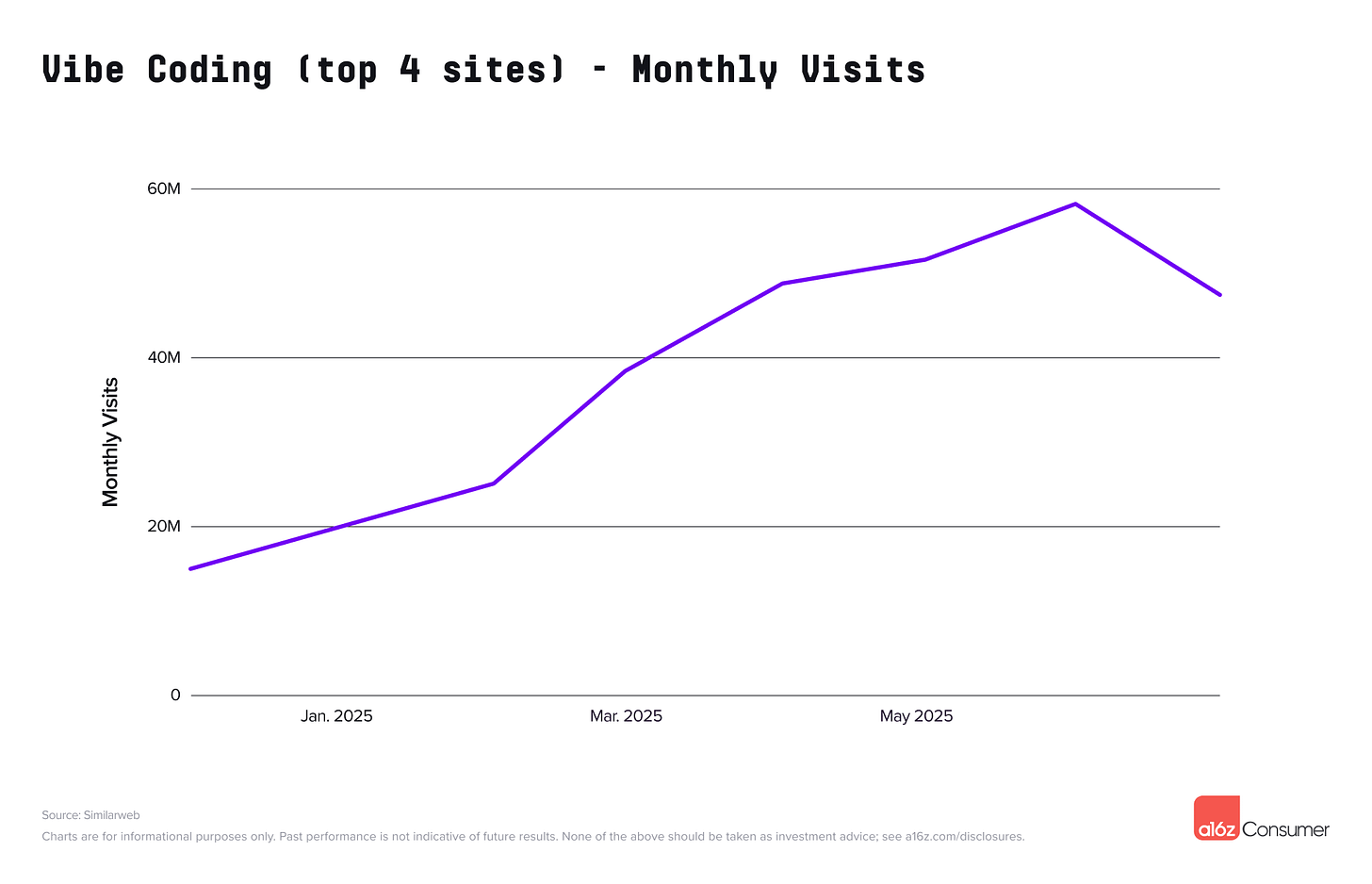

7. Vibe Coding as a New Creation Primitive

Every major evolution in computing comes with a new way to make things. DOS had command lines. The web had HTML. Mobile had touch-first interactions.

With vibe coding, AI now lets people build through intent instead of syntax.

Why Intent Becomes the Interface

Software creation has historically required a grueling translation process, moving from a human idea to a rigid structure and then into machine-readable syntax. Vibe coding removes this cognitive friction by allowing the user to describe the desired behavior, constraints, and tone in natural language while the AI handles the scaffolding.

This highlights a trend in AI adoption where users gravitate toward tools that reduce mental overhead. By focusing on intent rather than the “blueprint,” creators can sketch ideas in real time, letting the model manage the underlying technical abstractions.

Opening the Door to New Creators

Just as Canva democratized professional design, vibe coding is opening software development to a vast new class of autonomous creators.

Individuals who understand their specific workflows but lack formal programming skills can now build custom helpers, research tools, and local automations to solve immediate problems.

The goal isn’t to make everyone a “developer,” but to provide the agency to turn a thought into a functional tool in an afternoon. As these users realize they can build one working automation, they quickly begin imagining dozens more, driving a quiet but massive expansion of productivity at the edges of every industry.

How Developers Use It Differently

For experienced engineers, vibe coding is not a replacement for their craft but a powerful form of acceleration. While novices use it to bypass syntax they don’t know, experts use it to prompt out scaffolds and refactor structures at a speed manual typing cannot match.

This creates a unique duality where the technology lowers the floor for beginners while simultaneously raising the ceiling for professionals. AI-native environments are succeeding because they don’t automate away the engineering logic; they automate the busywork, allowing developers to remain architects of the system while the AI serves as the hands.

Why Companies Are Paying Attention

Menlo’s report suggests that deep-value spaces (agentic tools, building environments) could attract rising spending once they deliver on trust, memory, and context.

The corporate interest in vibe coding stems from its ability to unify building, hosting, and execution into a single conversational flow.

Teams are already using these environments to assemble internal data helpers and support scripts that previously would have languished in engineering backlogs for months. This significantly alters the economics of internal tooling, giving small teams the kind of leverage usually reserved for large, engineering-heavy organizations.

Because these tools deliver high-value results with minimal friction, monetization is quickly transitioning toward hybrid, usage-linked models that capture the value of this newfound organizational speed.

The Open Strategic Question

Will the market fragment into specialized vibe coding tools for specific tasks like video or data, or will a few general-purpose “operating systems” absorb the majority of this behavior?

Currently, usage patterns are varied, with some users preferring the precision of niche agents while others stick to broad environments that handle the entire project lifecycle.

Regardless of the eventual winner, vibe coding has proven to be incredibly sticky behavior. Once a creator builds something meaningful within a specific environment, the cost of switching becomes high, even if the underlying AI models are essentially interchangeable.

Vibe coding isn’t a gimmick. It’s becoming a foundation for how people will build with AI at every level.

Whenever the cost of creation falls this sharply, new categories form, and in AI, they form fast because AI assistants already sit in the center of workflow intent.

8. Geography and Global Dynamics: What’s Different About This Wave

Consumer AI didn’t follow the usual pattern of innovation trickling outward from a few hubs. It spread everywhere at once.

The moment models became accessible, people across regions began experimenting with AI assistants, creative tools, and lightweight automation in parallel. The global map for this wave looks like a network lighting up node by node.

Local Constraints Create Local Champions

Because this wave ignited globally rather than trickling out from Silicon Valley, local constraints such as specific regulatory guardrails, data rules, and unique payment infrastructures actually drive innovation.

Regional teams are building sharply optimized tools that integrate deeply with their home markets, often outperforming global platforms by addressing cultural nuances or local workflow gaps.

Interestingly, these tools frequently find accidental global success. A product built to solve a specific regional friction often resonates with a worldwide audience, flipping the traditional export script.

Language and Culture Shape Product Strengths

A massive portion of global AI usage is multilingual, shifting the competitive advantage toward teams that understand regional linguistic nuances. In creative and communication-heavy applications, literal accuracy is often less important than cultural resonance and tone.

As users increasingly rely on AI for translation, messaging, and summaries, tools that offer deep cultural alignment become indispensable. What begins as a regional specialty eventually creates a global pull, as users gravitate toward assistants that finally feel like they “understand” the context behind the prompt.

Emerging Capability Clusters

Distinct regional strengths are emerging quietly based on local talent and demand. Some markets are becoming hubs for advanced video and visual tools due to aggressive experimentation by local creators, while others specialize in AI-driven education where traditional systems have left gaps.

This multi-polar landscape suggests that the future of AI will not be dominated by a single global winner. Instead, several gravitational centers are forming at once, offering diverse paths to monetization.

For builders, this means success is found by serving a specific segment so deeply that the product becomes infrastructure; from there, global expansion becomes a natural byproduct of utility rather than a forced marketing goal.

9. Where the Next Generation of Consumer AI Will Emerge

While the current market is saturated with general assistants, the next frontier of consumer AI lies in High-Context Continuity.

Breakout value will emerge in “unsolved” verticals like health, finance, and caregiving. These are widely seen as areas where users have high emotional stakes but current tools lack the longitudinal memory to be truly useful.

Success in these categories requires moving beyond the “transactional” model to products that recognize patterns over months, not just minutes.

The Practical Strategy of Depth

The immediate opportunity for builders is to transition from general-purpose answers to relational systems across key lifestyle verticals. That’s where to invest or build.

Health and Finance

These sectors suffer from a “Trust and Context Gap.” People don’t want a generic calculator; they want a companion that notices patterns in their wellness routines or irregular income over long arcs of time.

Family Logistics and Caregiving

Families require “context stitching,” not just calendars. Value sits in tools that understand dynamics between people, anticipate friction, and manage the invisible emotional labor of coordinating care.

Home Maintenance and Learning

True AI productivity in the home involves a “Reality Interface” that tracks warranties and repair histories to predict maintenance. Similarly, in education, the winners will move beyond quick definitions to “scaffolding” that recognizes a learner’s specific plateaus and blind spots over years.

The next generation of breakout companies will be defined by Intentional Sensitivity. That’s the ability to understand boundaries, tone, and personal history.

In high-stakes life categories, trust is built through continuity. Founders should focus on building “memory-first” architectures; when the stakes are emotional, financial, or relational, a generic tool feels thin, but a tool with deep personal history becomes indispensable infrastructure.

10. What This Means for Founders and Investors: A Practical Playbook

Once you map how people actually behave with consumer AI, the strategy comes into focus. This market won’t keep rewarding broad surfaces or clever branding. It will reward depth, leverage, and a clean line between intent and fulfillment.

The companies that break out will understand how users climb from casual to committed, and how products evolve with them.

Choosing Where to Play: Horizontal or Vertical

Every product sits somewhere between broad capability and specific expertise. The decision isn’t philosophical; it’s tied to the user’s day. Horizontal tools serve many small touchpoints. Vertical tools win when the job is stable, repeatable, and context-heavy.

The test is simple: Can a default assistant do this well enough, or does the user hit a wall every time they try?

If the wall is real, a vertical wins. If not, the horizontal has the advantage. It’s a distribution-of-value question, not a model-capability question.

Designing Differentiation Against Default Assistants

Every consumer AI app competes with something already sitting on the user’s phone or in their browser. Since most AI assistants run on similar foundation models, differentiation comes from shaping the input instead of the output.

Long-term memory, workflow scaffolding, trusted context, and deeper alignment with the person’s routines become the real differentiators.

Founders should obsess over the moment where the default tool fails. That moment becomes the wedge. If the user hits it often enough, your product becomes the reliable path forward.

Building Pricing That Expands With the User

Most users start light and grow into heavier workflows, and pricing should match that trajectory. A low-friction entry plan builds comfort. The next tier should feel like an obvious productivity unlock. Upper tiers should feel like infrastructure for the people who rely on the tool daily.

This isn’t about pushing upgrades, but matching spend to intensity.

Sequencing Features Across the Consumer → Prosumer → Enterprise Ladder

AI products grow through pull, not push. Each stage expects something different:

Consumer layer: clarity, speed, and low cognitive load. Remove friction, simplify decisions, and earn trust.

Prosumer layer: deeper controls, richer memory, better context handling, and fast iteration. This is where AI productivity becomes a selling point.

Enterprise layer: collaboration spaces, governance, identity management, and deployment safety. By the time a team cares, the tool has already proven itself in individual workflows.

Building Defensibility Beyond the Model

Models converge. Features get copied. So naturally, the durable moat becomes the relationship with the user. That comes through memory, continuity, personalized data structures, and the way the product understands patterns over time.

Defensibility in AI comes from becoming the place where users keep their work-in-progress thinking, not just their prompts. That depth is hard to clone because it grows from months of interaction, not from a fresh model checkpoint.

11. What the Next Decade Looks Like

The next decade of consumer AI will not be defined by the novelty of models, but by the maturity of products. We have moved past the era of the “single query” and into a phase where trust is earned through continuity, longitudinal memory, and the ability to handle the messy follow-through of real life.

As individual habits harden into professional reliance, the products that age well will be those that behave less like polished prototypes and more like essential companions.

Breakout success now depends on capturing the “portable trust” that forms when an assistant helps a user navigate a stressful decision or a complex workflow without adding friction.

Whether through prosumer depth or the quiet spillover into workplace infrastructure, the winners will be the ones that fit the natural grain of human behavior, interruptions, shifting priorities, and all.

The field is still settling, so the opportunity is massive. And the future belongs to tools that offer a steady, familiar presence and turn micro-moments of assistance into durable AI productivity.