R.I.P. Basic Prompting

MIT just dropped a technique that changes how AI actually reasons

A new paper from MIT CSAIL introduces a simple but powerful shift: instead of forcing AI to answer once, make it reason like a system that can inspect, decompose, and verify its own work before committing.

The technique is called Recursive Language Models (RLMs).

It makes a model such as ChatGPT reason more like a review panel than a single confident voice, and the paper reports materially better results than standard prompting, with gains above 100%.

Inside this article

If you use AI for anything that matters, this is the piece to bookmark.

Here is exactly what you will get:

The core insight in one line

Why “long context” fails, and why RLMs fix the real bottleneck.

The mental model that upgrades your prompting overnight

How to treat the prompt as an external environment the model can inspect and work over.

When to use this vs normal prompting

A simple decision rule so you do not overengineer easy tasks.

The tangible prompt you can use today (copy paste)

An RLM-style operating prompt that forces decomposition, extraction, verification, and explicit uncertainty.

What the paper actually changes in product terms

Why this is inference-time architecture, not prompt tricks.

Where RLMs beat summarization and RAG

The exact failure modes they solve.

The blueprint for implementing this in real systems

REPLs, recursion, and selective context access.

The second-order effect

Why the next wave of AI products will compete on context management, not model choice.

The one-line idea

Most LLM failures are not reasoning failures.

They are context management failures.

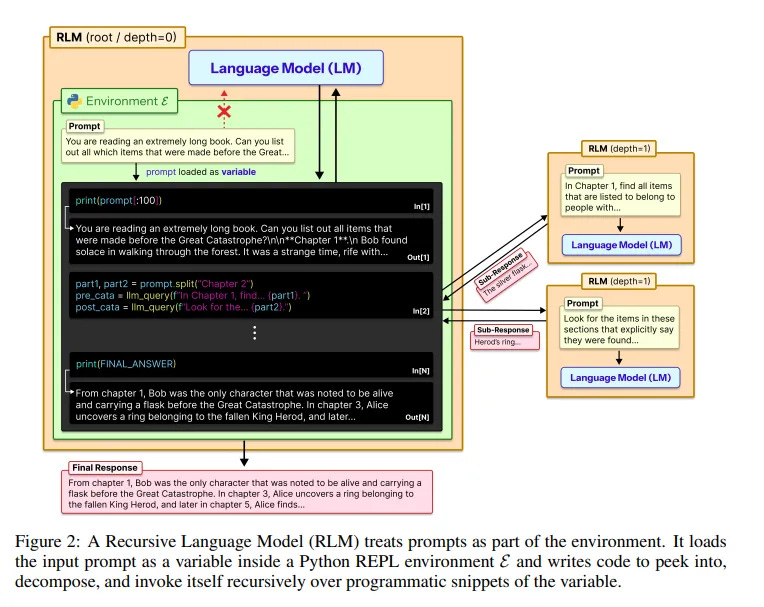

RLMs fix this by moving the prompt out of the model's context window and into an external environment, then letting the model interact with it programmatically.

Instead of dumping everything into the context window, the model:

• Inspects the input

• Slices it into relevant parts

• Calls itself recursively on only what matters

• Verifies intermediate results

• Synthesizes a final answer

This is why RLMs handle inputs orders of magnitude larger than the model’s context window, and still improve quality even on shorter prompts.
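To make the five steps above concrete, here is a minimal Python sketch of the loop. It is an illustration under stated assumptions, not the paper's implementation: `rlm_answer`, `split_chunks`, and the `LLM` callable are hypothetical names, the model call is a function you supply, and relevance is approximated with a plain keyword match rather than code the model writes for itself.

```python
from typing import Callable, List

# Assumption: any function that takes a prompt string and returns text.
LLM = Callable[[str], str]


def split_chunks(text: str, size: int) -> List[str]:
    """Slice the input into fixed-size pieces (a keyword may straddle a boundary)."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def rlm_answer(question: str, corpus: str, keywords: List[str],
               llm: LLM, chunk_size: int = 500) -> str:
    # 1. Inspect: if the input is small enough, answer it in a single call.
    if len(corpus) <= chunk_size:
        return llm(f"Q: {question}\nContext: {corpus}")

    # 2. Slice: keep only the chunks that mention one of the keywords.
    chunks = split_chunks(corpus, chunk_size)
    relevant = [c for c in chunks
                if any(k.lower() in c.lower() for k in keywords)]

    # 3. Recurse: each relevant chunk gets its own small sub-call.
    partials = [rlm_answer(question, c, keywords, llm, chunk_size)
                for c in relevant]

    # 4. Verify (crudely): discard sub-answers that are empty or "UNKNOWN".
    verified = [p for p in partials if p.strip() and p.strip() != "UNKNOWN"]

    # 5. Synthesize: combine only the verified partial answers.
    if not verified:
        return "No supporting evidence found."
    return llm(f"Q: {question}\nPartial answers:\n" + "\n".join(verified))
```

The point of the sketch is that no single call ever sees more than one chunk of the corpus, so the total input size is bounded by storage, not by the context window.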

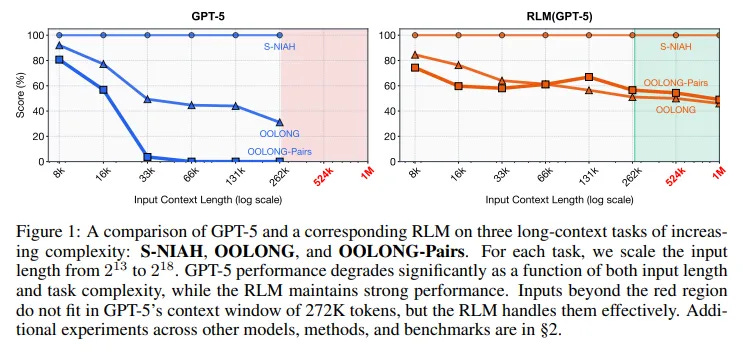

Why “just increase context length” does not solve this

Even frontier models show context rot.

As inputs get longer and tasks get more complex, performance degrades fast. Not because the model forgets everything, but because it cannot selectively reason over dense information.

Summarization helps, but it throws away details.

Retrieval helps, but dense tasks often need many interdependent parts.

Bigger windows help, but degradation still happens.

RLMs attack the root cause: how context is accessed, not how big it is.
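One way to picture "how context is accessed" is a small store that holds the full input outside the model and only hands back targeted reads. The sketch below is a hypothetical illustration: `ContextStore`, `peek`, and `grep` are names invented here, not an API from the paper.

```python
import re
from typing import List


class ContextStore:
    """Holds the full input outside the model and exposes small, targeted reads."""

    def __init__(self, text: str) -> None:
        self._text = text

    def size(self) -> int:
        """Length of the stored input in characters."""
        return len(self._text)

    def peek(self, start: int, length: int) -> str:
        """Read a short window without loading the rest."""
        return self._text[start:start + length]

    def grep(self, pattern: str, window: int = 40) -> List[str]:
        """Return every regex match with `window` characters of context on each side."""
        snippets = []
        for match in re.finditer(pattern, self._text):
            lo = max(0, match.start() - window)
            hi = min(len(self._text), match.end() + window)
            snippets.append(self._text[lo:hi])
        return snippets
```

Unlike a summary, nothing is discarded: every detail stays available, and only the snippets returned by `peek` or `grep` would need to be passed to the model.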

What changes in practice

Before (basic prompting)

You paste everything.

You ask one question.

The model answers once.

It sounds confident.

You trust it.

If it is wrong, you usually never know.

After (RLM mindset)

You give the model a workspace and rules:

• Inspect the corpus

• Decompose the problem

• Solve sub-parts independently

• Verify logic and assumptions

• Commit only when confidence is high

This feels less like chatting and more like working with a junior analyst who shows their work.
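The "solve independently, verify, then commit" rules can be sketched as a simple gate. This is a hedged illustration: `solve_and_gate` and `SubResult` are hypothetical names, and both `solve` and `verify` are functions you would supply (for example, one model call to answer and a second, independent check).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SubResult:
    task: str
    answer: str
    verified: bool


def solve_and_gate(subtasks: List[str],
                   solve: Callable[[str], str],
                   verify: Callable[[str, str], bool]) -> Dict[str, object]:
    """Solve each sub-part in isolation, check it, and commit only if all checks pass."""
    results = []
    for task in subtasks:
        answer = solve(task)  # each sub-part is solved on its own
        results.append(SubResult(task, answer, verify(task, answer)))

    unverified = [r.task for r in results if not r.verified]
    return {
        "committed": not unverified,  # commit only when nothing failed verification
        "answers": {r.task: r.answer for r in results if r.verified},
        "unverified": unverified,  # uncertainty is surfaced, not hidden
    }
```

A caller would treat `committed == False` as a signal to retry the listed sub-parts or report them as open questions, which is the "shows their work" behavior described above.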

When you should use this

Use an RLM-style workflow when at least one of these is true:

• The input is long or growing

• The answer depends on many parts, not one

• You care more about correctness than speed

• You are doing research, diligence, strategy, or codebase understanding

For short, simple questions, basic prompting is still faster and often better.
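The decision rule above is simple enough to write down as a checklist in code. The field and function names below are hypothetical, chosen only to mirror the four bullets.

```python
from dataclasses import dataclass


@dataclass
class Task:
    long_or_growing_input: bool = False
    depends_on_many_parts: bool = False
    correctness_over_speed: bool = False
    deep_analysis: bool = False  # research, diligence, strategy, codebase understanding


def use_rlm_workflow(task: Task) -> bool:
    """Return True when at least one of the four conditions holds."""
    return any((
        task.long_or_growing_input,
        task.depends_on_many_parts,
        task.correctness_over_speed,
        task.deep_analysis,
    ))
```

A task with every flag left at `False` falls through to basic prompting, which matches the default recommended for short, simple questions.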

The tangible prompt you can use today

You cannot fully recreate the paper’s REPL-based system inside a plain chat box.

But you can steal the operating pattern.

Copy paste this:
